Kubernetes is quickly becoming the default when it comes to application deployment and management. Even though it was originally geared towards very large clusters, it can also be deployed on individual devices, such as a Raspberry Pi. There are a number of Kubernetes distributions that offer all-in-one, single-node clusters. I decided to have a closer look at them to see what they have to offer.

Distributions

My initial search turned towards "edge" type distributions, which should be able to run on a fairly minimal amount of resources. Hence I selected the following options:

- microk8s by Canonical https://microk8s.io/

- k3s by Rancher https://k3s.io/

- k0s project by Mirantis https://k0sproject.io/

TL;DR

If you're looking for an easy all-in-one install and don't mind higher memory consumption and somewhat opinionated, harder-to-customise components, microk8s is the best option for you.

If you're looking for the smallest of them all, with the absolute bare minimum in the package, k0s is the answer.

If you fall somewhere in the middle, k3s is the solution.

For the details, keep on reading.

Hardware requirements

The table below presents the official hardware requirements for each of the distributions (for a single all-in-one node).

| Parameter | microk8s | k3s | k0s |
|---|---|---|---|
| Memory (minimal) | 2GiB¹ | 512MiB² | 1GiB |
| Memory (suggested) | 4GiB | 1GiB | 1GiB |
| vCPU | 1 | 1 | 1 |

¹ Value based on my experiments; not specified in the documentation.

² Based on my tests (see below), this is not feasible; 1GiB is required.

Licence, source availability and certification

| | microk8s | k3s | k0s |
|---|---|---|---|
| Licence | Apache 2.0 | Apache 2.0 | Apache 2.0 |
| Source code | GitHub | GitHub | GitHub |
| CNCF certified | yes | yes | yes |

Installation process

All distributions have a fairly simple installation process. It involves either downloading and running a shell script (k3s, k0s) or running a snap command to install the software (microk8s).

Built-in features

All three distributions vary quite significantly when it comes to built-in features (those available as part of the distribution). The table below shows the key differences. If a feature is provided by an external project (but referred to in the documentation), it's not shown below, but it is mentioned in the detailed comparison later.

| | microk8s | k3s | k0s |
|---|---|---|---|
| DNS | coreDNS | coreDNS | coreDNS |
| Networking | calico, multus | flannel | kube-router, calico |
| Storage | hostpath, openebs | hostpath | none |
| Runtime | containerd | containerd | containerd |
| Load-balancer | metallb | klipper-lb | none |
| Ingress | nginx, ambassador, traefik | traefik | none |
| GPU | nvidia | none | none |
| Dashboard | yes | no | no |
| Control plane storage | etcd | etcd, PostgreSQL, MySQL | etcd, sqlite, PostgreSQL, MySQL |

As can be seen from the table, microk8s offers the most features out of the box (with additional ones not listed above), whilst k0s is the bare-bones distribution, with k3s somewhere in the middle. It's worth noting that in the Kubernetes world adding new components is fairly trivial, so even if the distro itself doesn't support a particular feature (or provides only one option), that is generally not a big issue. Having said that, a relatively well-tested set of features is still a great thing to have.

Other features

In addition to the various bundled software options, I looked at the following features:

| | microk8s | k3s | k0s |
|---|---|---|---|
| HA/multinode option | yes | yes | yes¹ |
| Air-gapped install | yes¹ | yes¹ | yes¹ |
| IPv6 support | yes¹ | no | yes |
| ARMv7 support | yes | yes | yes |
| ARM64 support | yes | yes | yes |

¹ Some manual configuration required.

Architecture

microk8s

Microk8s comes as an Ubuntu snap. That snap also depends on the 'core' snap (which is preinstalled on Ubuntu). Most binaries are either statically linked or dynamically linked within the snap ecosystem, which resides in the /snap directory, but there are also a number of binaries that are dynamically linked against libraries on the underlying system. All of that makes it relatively hard to determine which components come from the distribution and which from the underlying system. The necessary snaps take up about 1GB of disk space.

k3s

K3s comes as a single binary. It installs itself into /var/lib/rancher/k3s (except for the k3s binary itself, which ends up in /usr/local/bin). All binaries are statically linked, and busybox and coreutils are generally used for everything not provided by Kubernetes itself. It doesn't need anything from the underlying OS. It takes up about 200MB of disk space.

k0s

K0s comes as a single binary. That binary is self-extracting and contains the binaries necessary to run Kubernetes. All components are in /var/lib/k0s. All binaries are statically linked and do not need anything from the host OS. The distribution uses about 300MB of disk space.

Storage

microk8s

Microk8s comes with two storage options. The first one is a hostpath-provisioner. I haven't managed to find out much about it: it comes from the docker.io/cdkbot/hostpath-provisioner-amd64 image, with no source repository attached, and it exposes no configuration options. The default storage directory can be changed either by editing the running Deployment and changing the PV environment variable, or by symlinking the default directory to somewhere else. One thing to note is that this is a static provisioner, so a PersistentVolume must be created manually before the PersistentVolumeClaim can be bound to it. It provides the microk8s-hostpath StorageClass.

The second option is provided by the OpenEBS project (https://openebs.io/). This is far more flexible than the first provisioner, but comes with a somewhat surprising caveat: iSCSI must be installed and enabled on the node, even if you only intend to use the hostpath provisioner. This is a dynamic provisioner and provides two StorageClasses:

- openebs-hostpath, which uses a directory on the host. Again, by default microk8s selects a path under /snap/var/, which is not great and can't be changed easily, as the whole package is deployed in the background using Helm with all options pre-set.

- openebs-device, which uses a block device (not necessarily something that is used a lot in small clusters).

k3s

K3s comes with the local-path-provisioner by Rancher (https://github.com/rancher/local-path-provisioner). The provisioner is configurable using a ConfigMap, which makes adjustments very easy. It provides the local-path StorageClass.
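To illustrate how small such an adjustment is, here is a minimal Python sketch that builds the `config.json` payload to move the storage directory. It assumes the upstream local-path-provisioner format (a `nodePathMap` list with the special `DEFAULT_PATH_FOR_NON_LISTED_NODES` entry) and that k3s keeps this payload under the `config.json` key of the `local-path-config` ConfigMap in `kube-system`; the helper name and the `/mnt/data/k3s-storage` path are hypothetical.

```python
import json

# Special node name the upstream local-path-provisioner uses for its default entry.
DEFAULT_NODE = "DEFAULT_PATH_FOR_NON_LISTED_NODES"


def local_path_config(default_path, per_node=None):
    """Build a config.json payload for local-path-provisioner (hypothetical helper).

    default_path: directory used on every node that is not listed in per_node.
    per_node: optional mapping of node name -> list of directories.
    """
    node_path_map = [{"node": DEFAULT_NODE, "paths": [default_path]}]
    for node, paths in (per_node or {}).items():
        node_path_map.append({"node": node, "paths": list(paths)})
    return json.dumps({"nodePathMap": node_path_map}, indent=2)


if __name__ == "__main__":
    # Paste the output into the config.json key of the ConfigMap, e.g. with
    # `kubectl -n kube-system edit configmap local-path-config` (assumed name).
    print(local_path_config("/mnt/data/k3s-storage"))
```

Existing PersistentVolumes keep their original host paths, since the path is only chosen when a volume is provisioned.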

k0s

K0s doesn't offer any persistent storage by default.

Networking

microk8s

Microk8s uses Calico networking by default. Configuration changes can be made by editing the configuration file and restarting the cluster.

k3s

K3s uses Flannel. Because of the way it works (de facto a single bridge on the host), it provides the least overhead in a single-node cluster, i.e. there are no user-space processes involved in moving the packets or setting up the network.

k0s

K0s uses kube-router by default and provides an easy option to use Calico. Configuration for both options is very straightforward.

Load-balancers

microk8s

Microk8s uses MetalLB (https://metallb.universe.tf/), which is a well-established and fairly feature-rich project. The default address pool can be configured when enabling the add-on.
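Since the pool is passed as an argument when enabling the add-on, a typo in the range is easy to make. The Python sketch below validates an address range and builds that argument; it assumes the `metallb:<first>-<last>` form shown in the microk8s add-on documentation, and the helper name and addresses are hypothetical.

```python
import ipaddress


def metallb_enable_arg(first, last):
    """Validate an address range and build the `microk8s enable` argument.

    Returns (argument, number_of_addresses). The metallb:<first>-<last>
    syntax is an assumption based on the microk8s add-on documentation.
    """
    start = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    # Check versions first: comparing IPv4 with IPv6 addresses raises TypeError.
    if start.version != end.version:
        raise ValueError("both ends of the range must be the same IP version")
    if start > end:
        raise ValueError("the first address must not be greater than the last")
    return f"metallb:{start}-{end}", int(end) - int(start) + 1


if __name__ == "__main__":
    arg, size = metallb_enable_arg("192.168.1.240", "192.168.1.250")
    print(f"microk8s enable {arg}  ({size} addresses)")
```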

k3s

K3s uses klipper-lb (https://github.com/k3s-io/klipper-lb), which is quite a simple load-balancer: it works by mapping ports on the host IPs and using iptables to forward the traffic to the Cluster IPs.
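To make that mechanism more concrete, the Python sketch below generates the kind of NAT rules involved: a DNAT rule that redirects traffic arriving on a host port to the service's Cluster IP, plus a MASQUERADE rule so that replies return through the same host. This is a simplified illustration of the general technique, not the exact rules klipper-lb installs; the function name, ports and addresses are hypothetical, and the commands are only printed, never executed.

```python
def dnat_rules(host_port, cluster_ip, service_port, proto="tcp"):
    """Return iptables commands (as argument lists) forwarding a host port to a Cluster IP."""
    return [
        # Rewrite the destination of packets arriving on host_port to the service.
        ["iptables", "-t", "nat", "-I", "PREROUTING",
         "-p", proto, "--dport", str(host_port),
         "-j", "DNAT", "--to-destination", f"{cluster_ip}:{service_port}"],
        # Rewrite the source so replies from the service come back via this host.
        ["iptables", "-t", "nat", "-I", "POSTROUTING",
         "-d", f"{cluster_ip}/32", "-p", proto, "-j", "MASQUERADE"],
    ]


if __name__ == "__main__":
    for rule in dnat_rules(80, "10.43.0.20", 80):
        print(" ".join(rule))
```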

k0s

K0s doesn't offer any load-balancer by default.

Ingress

microk8s

Microk8s comes with two types of ingress: nginx and traefik. Both work well, but one thing I've noticed is that nginx provides an IngressClass of public instead of the usual nginx, which might cause issues with other applications that create Ingress resources and expect the nginx class.
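One way to sidestep this is to set the class explicitly in every Ingress you create. The Python sketch below builds a `networking.k8s.io/v1` Ingress manifest (which `kubectl apply -f` accepts as JSON) with a configurable `ingressClassName`; the helper name, host name and the Ghost service name are hypothetical examples.

```python
import json


def ingress_manifest(name, host, service, port, ingress_class="nginx"):
    """Build a networking.k8s.io/v1 Ingress routing host/ to service:port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # On microk8s the bundled nginx controller uses the "public" class.
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    # Ghost listens on port 2368 by default; the host name is a placeholder.
    manifest = ingress_manifest("ghost", "blog.example.com", "ghost", 2368,
                                ingress_class="public")
    print(json.dumps(manifest, indent=2))
```

Keeping the class a parameter means the same manifest can target nginx on other distributions and public on microk8s.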

k3s

K3s uses traefik by default. If you don't want to use it, it has to be disabled at installation time (and again during upgrades).

k0s

K0s doesn't provide any ingress by default.

IPv6 support

IPv6 has been supported by upstream Kubernetes since v1.18. For it to actually work, though, both the networking plugin and the load-balancer (if used) must support it.

microk8s

Microk8s doesn't support IPv6 out of the box, but there's a blog post that explains how to enable it.

k3s

K3s doesn't support IPv6, as Flannel doesn't support IPv6.

k0s

K0s supports IPv6 when using Calico. The official documentation shows the config that needs to be applied. One thing I've noticed is that it doesn't explicitly state that you have to use Calico (and not kube-router).
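Since that requirement is easy to miss, a small pre-flight check can help. The Python sketch below inspects the `spec.network` section of a k0s config (already parsed into a dict, e.g. from YAML) and flags dual-stack settings that don't use Calico or lack valid IPv6 CIDRs. The key names (`provider`, `dualStack.enabled`, `IPv6podCIDR`, `IPv6serviceCIDR`) are an assumption based on the k0s documentation of that time and should be checked against the version you run; the function name is hypothetical.

```python
import ipaddress


def check_dual_stack(network):
    """Return a list of problems found in a k0s spec.network section (a dict)."""
    problems = []
    dual = network.get("dualStack", {})
    if not dual.get("enabled"):
        return problems  # nothing to check for single-stack clusters
    # Per the observation above, dual-stack only works with Calico.
    if network.get("provider") != "calico":
        problems.append("dual-stack requires provider: calico (not kube-router)")
    for key in ("IPv6podCIDR", "IPv6serviceCIDR"):
        value = dual.get(key)
        try:
            if ipaddress.ip_network(value).version != 6:
                problems.append(f"{key} is not an IPv6 network")
        except (TypeError, ValueError):
            problems.append(f"{key} is missing or invalid")
    return problems
```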

Upgrade process

All three projects closely track upstream Kubernetes releases, to the point where they all include the upstream version in their own release names. There are two types of upgrade to consider here. The first is a patch release upgrade (for example from 1.21.1 to 1.21.2), which doesn't introduce any major changes and is generally expected to be hitless. The second is a minor release upgrade (for example from 1.21.4 to 1.22.0), which may introduce API changes that can potentially affect the workloads. On top of that, each distribution takes its own approach to the upgrade itself.
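Because the upstream version is embedded in each release name, the kind of upgrade can be worked out mechanically. A minimal Python sketch, assuming release names start with an optional `v` followed by `major.minor.patch` (true for the names used in this post, such as `v1.21.4-3`, `v1.21.4+k3s1` and `v1.22.1+k0s`); the function names are hypothetical.

```python
import re

# Leading "v" is optional; anything after major.minor.patch is the distro suffix.
_VERSION = re.compile(r"v?(\d+)\.(\d+)\.(\d+)")


def k8s_version(release):
    """Extract the upstream (major, minor, patch) tuple from a release name."""
    match = _VERSION.match(release)
    if match is None:
        raise ValueError(f"no Kubernetes version in {release!r}")
    return tuple(int(part) for part in match.groups())


def upgrade_kind(current, target):
    """Classify an upgrade as 'patch', 'minor' or 'major'.

    Returns 'none' when the upstream version does not increase; distro-specific
    suffixes (e.g. +k3s1 -> +k3s2) are ignored.
    """
    cur, tgt = k8s_version(current), k8s_version(target)
    if tgt <= cur:
        return "none"
    if tgt[:2] == cur[:2]:
        return "patch"
    return "minor" if tgt[0] == cur[0] else "major"


if __name__ == "__main__":
    print(upgrade_kind("1.21.1", "1.21.2"))
    print(upgrade_kind("v1.21.4+k3s1", "v1.22.0+k3s1"))
```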

For distributions that offer a hitless upgrade, this means that the workloads have to be recreated in order to take advantage of the new container runtime.
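To find the workloads still running on the old runtime after such an upgrade, one option is to compare each pod's start time with the moment the upgrade finished. The Python sketch below does that on the parsed output of `kubectl get pods -A -o json`; treating `status.startTime` as a proxy for "started on the old runtime" is an assumption, and the helper names are hypothetical.

```python
from datetime import datetime, timezone


def parse_k8s_time(value):
    """Parse a Kubernetes RFC 3339 UTC timestamp such as 2021-09-10T12:00:00Z."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def pods_started_before(pod_list, cutoff):
    """Return sorted namespace/name strings of pods started before cutoff.

    pod_list: parsed JSON from `kubectl get pods -A -o json`.
    cutoff: timezone-aware datetime at which the upgrade finished.
    """
    stale = []
    for item in pod_list.get("items", []):
        started = item.get("status", {}).get("startTime")
        if started is None:
            continue  # pending pods have no start time yet
        if parse_k8s_time(started) < cutoff:
            meta = item["metadata"]
            stale.append(f'{meta["namespace"]}/{meta["name"]}')
    return sorted(stale)
```

The pods it returns could then be recreated, for example with `kubectl rollout restart` on the Deployments that own them.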

Testing setup

To test these upgrades, all distributions were initially deployed using a 1.20.x Kubernetes release (the latest available for the given distribution). In each scenario the same workloads (cert-manager, Ghost, MariaDB and nginx ingress) were deployed. I also used a simple curl-based script (running on a different machine) to continuously download the main page of the blog, recording the response time and status (success or error) of each request. Finally, I checked the status of the pods and their logs.
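The original probe was a simple curl-based script. As a rough Python equivalent, under my own assumptions (one request per call; any HTTP error, connection failure or timeout counts as a failure), the sketch below records `(timestamp, response_time, ok)` samples and derives the longest outage from them, i.e. the time from the first failed request to the next successful one. The function names are hypothetical.

```python
import http.client
import time
import urllib.request


def probe(url, timeout=5.0):
    """Fetch url once and return (timestamp, response_time_seconds, ok)."""
    started = time.time()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()  # download the whole page, as curl would
            ok = True    # urlopen raises HTTPError for 4xx/5xx responses
    except (OSError, http.client.HTTPException):
        # URLError/HTTPError and socket timeouts are all OSError subclasses.
        ok = False
    return started, time.time() - started, ok


def longest_outage(samples):
    """Longest gap (seconds) between a first failure and the next success.

    samples: time-ordered (timestamp, response_time, ok) tuples. An outage
    that is still ongoing at the end of the samples is not counted.
    """
    longest, outage_start = 0.0, None
    for timestamp, _response_time, ok in samples:
        if not ok and outage_start is None:
            outage_start = timestamp
        elif ok and outage_start is not None:
            longest = max(longest, timestamp - outage_start)
            outage_start = None
    return longest
```

In use, `probe` would be called in a loop (say, once a second with `time.sleep(1)`) against the blog URL while the upgrade runs, and `longest_outage` applied to the collected samples afterwards.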

microk8s

The upgrade from 1.20 to 1.21 does impact the service: on the hardware I used, the service was unavailable for about 4 minutes 20 seconds. The upside is that once the cluster is back up and running, all the runtimes have also been upgraded. Managed components (like CoreDNS or the ingress controller) are not upgraded by this process.

k3s

K3s offers a very easy upgrade process. The cluster control plane is stopped (the workloads keep running), the original installation script is run again, and the control plane is started again. About a minute later the control plane is upgraded. Since the workloads are not touched by the upgrade process, they have to be restarted manually afterwards.

k0s

K0s also offers a very easy upgrade process, similar to k3s, that doesn't affect the workloads.

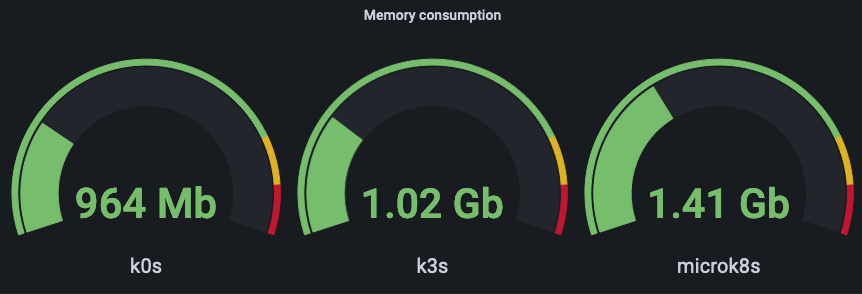

Memory consumption

For a small distribution, a small memory footprint is key, so I tested all three distributions in the same setup.

Testing setup

In order to see those distributions in action, I came up with a number of test workloads: an instance of the Ghost blogging software, a MariaDB database, cert-manager and an nginx ingress controller. I also installed OpenEBS to provide local storage. The instances also ran node-exporter inside the cluster so that the metrics could be captured by Prometheus (running on a separate machine). All the components were installed using Terraform to ensure consistency.

Tests were carried out in AWS using t3a instances provisioned with 4GiB of memory. Memory utilisation was measured as the difference between MemTotal and MemAvailable, as reported by node-exporter. The root volume was 10GiB, and I used Ubuntu 20.04.3 LTS as the OS distribution.
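node-exporter reads these values from /proc/meminfo and exposes them as `node_memory_MemTotal_bytes` and `node_memory_MemAvailable_bytes`, so in Prometheus the measurement is simply the difference of the two. For a quick check directly on a node, here is a minimal Python sketch of the same calculation (the function name is hypothetical):

```python
def used_memory_bytes(meminfo_text):
    """Return MemTotal - MemAvailable, in bytes, from /proc/meminfo content."""
    values = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            # Sizes are reported in "kB", which the kernel means as KiB (1024 bytes).
            # Count-only fields such as HugePages_Total get scaled too but are unused.
            values[key.strip()] = int(fields[0]) * 1024
    return values["MemTotal"] - values["MemAvailable"]
```

On a Linux node it can be fed the file directly, e.g. `used_memory_bytes(open("/proc/meminfo").read()) / 2**20` for the value in MiB.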

The distributions were all on the most recent releases available at that time:

| | microk8s | k3s | k0s |
|---|---|---|---|
| Kubernetes | v1.21.4-3 | v1.21.4+k3s1 | v1.22.1+k0s |
| containerd | 1.4.4 | 1.4.9-k3s1 | 1.5.5 |

So let's first have a look at the memory consumption of an empty cluster running only node-exporter (as a DaemonSet).

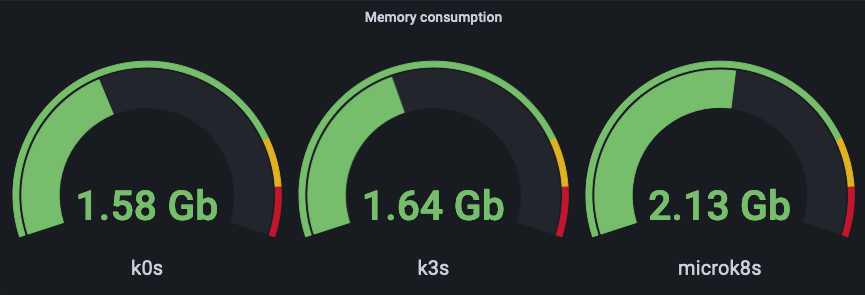

The next measurement was taken with additional components: OpenEBS (localpath only), cert-manager and nginx-ingress:

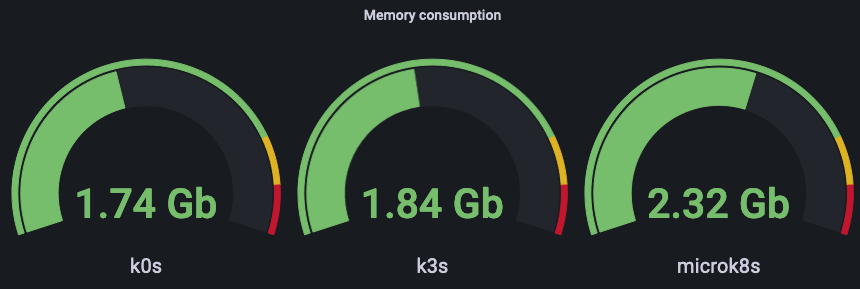

Finally, the last measurement was taken with Ghost and MariaDB added.

There are clearly two tiers here: k0s and k3s at the lower end, and microk8s with almost 0.5GB higher usage.

Conclusion

Microk8s is the richest small distribution. It offers a lot of additional components that can be easily enabled. But that comes at the cost of a much higher overall memory footprint and the need to rely on relatively inflexible components for additional features.

Both k0s and k3s provide much more streamlined distributions and offer a similar experience. I personally found k0s easier to deal with, as it doesn't ship any additional components beyond DNS. It also seems to publish releases slightly faster than k3s.

Photo by Laura Ockel on Unsplash